For a while now, I’ve been using this little clamshell as my private traveling machine. I have dragged it along as far as Japan, and in general it has never let me down. Granted, the battery life isn’t great, the shrunken keyboard isn’t made for writing a thesis and the dual-core Bay Trail Celeron isn’t the best-performing mobile CPU. But its tiny housing fits into my A4-sized bag, it has a touch screen, and with 4 GB of main memory it even runs Visual Studio 2015 Community at a decent speed. It also has an Ethernet port, an HDMI port, an SD card reader and a USB 3 host port. And all this without adapters, dongles, port replicators etc. It’s even got 2.4 and 5 GHz Wi-Fi and Miracast.

The main drawback the machine has is its 500GB HGST spinning disk. But this was about to change.

So I found a 128 GB SanDisk SSD (Z400), 2.5’’ SATA, at reichelt.de for a reasonable price. Its 7 mm housing is the same size as the internal HGST drive, so I ordered one.

Now SanDisk offers a number of software packages that make your life with the SSD easier; the most important one is their SSD Dashboard. It also contains a link to a single-use version of a harddisk-to-SSD transfer software. So I downloaded the dashboard, hooked up the SSD to a USB-to-SATA converter and fired up the transfer software to check if this setup would be OK. But before making actual changes, I ran the Disk2vhd tool from Sysinternals to capture a full disk image of the internal harddisk to an external drive.

Now in order to do the actual transfer, the content of the old harddrive had to fit onto the much smaller SSD, so I removed a lot of things. I changed the OneDrive config to not keep anything local (down 20 GB), removed all local media files (down another 60 GB), uninstalled some older versions of software (VS2010) and cleaned up my downloads directory. A very helpful tool for this process is WinDirStat, which I just ran in a portable version. (I actually keep it around in the tools directory of my OneDrive.)
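If you don't have WinDirStat at hand, the basic question it answers ("which top-level folders eat the most space?") can be approximated in a few lines of Python. This is only a rough sketch and not how WinDirStat works internally; the function names `dir_sizes` and `largest` are made up for this example, and unreadable files are simply skipped.

```python
import os

def dir_sizes(root):
    """Return {name: total bytes} for every entry directly under root."""
    sizes = {}
    for entry in os.scandir(root):
        if entry.is_symlink():
            continue  # do not follow links, to avoid counting data twice
        try:
            if entry.is_file():
                sizes[entry.name] = entry.stat().st_size
            elif entry.is_dir():
                total = 0
                # os.walk silently skips directories it cannot read
                for dirpath, _dirnames, filenames in os.walk(entry.path):
                    for name in filenames:
                        try:
                            total += os.path.getsize(os.path.join(dirpath, name))
                        except OSError:
                            pass  # locked or vanished file: ignore it
                sizes[entry.name] = total
        except OSError:
            pass  # entry could not be inspected at all
    return sizes

def largest(root, count=10):
    """Return the `count` biggest entries under root as (name, bytes) pairs."""
    ranked = sorted(dir_sizes(root).items(), key=lambda kv: kv[1], reverse=True)
    return ranked[:count]

# Example use (the path is just an illustration):
#   for name, size in largest("C:\\"):
#       print(f"{size / 1e9:8.2f} GB  {name}")
```

Scanning a whole system drive this way can take a while, and the numbers will differ a bit from what Explorer shows, since this sums file sizes rather than allocated clusters.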

After having shrunk down the content of the C drive, I found that I still had a D drive that the transfer software insisted on moving to the SSD. On the Medion akoya, that’s the recovery partition used to reset the machine to the state it came in, which was Windows 8. I never planned to go back to that, so I decided to remove the partition to save some precious SSD space. Note that if you do that, the recovery function of the notebook that’s triggered by holding down F11 upon boot will not work anymore. I decided that I’ll be fine with the built-in recovery mechanisms of Windows 10, but that’s up to everyone to decide for themselves.

So I then fired up the transfer software and a few hours later, I had an SSD with the content of my harddrive. I disconnected the SSD in its USB-SATA housing and then shut down the machine.

The disassembly process was actually very smooth and simple. Essentially, it was removing the screws on the bottom and then using my trusty iFixit Spudger to carefully pry open the plastic housing. After that, it was just two more screws to remove the harddisk frame, carefully pulling out the SATA cable and then a few more screws to take the harddisk out of the frame. I then mounted the SSD into the frame, fastened the screws, put the frame back into its place in the housing, carefully attached the SATA cable, fastened the screws of the frame, then put on the plastic cover and tightened all the remaining screws. Needless to say, I did all this with the machine shut down and the power supply disconnected, paying attention not to damage the LiPo battery, since these can get rather nasty when punctured in the wrong spot (make that: in any spot!).

Booting up the machine initially got me a boot failure. That was probably because Windows 10 doesn’t really shut down on “shutdown” but hibernates, and the “shrinking process” left the SSD with a stale hibernation image that Windows correctly refused to restore. But the second boot was all right and from then on everything worked as it should.

Or almost…

Working with the machine for a few hours made me notice a strange behavior. A couple of times every hour the machine would “freeze” for a while and do almost nothing. The mouse cursor was still working (so no blocked interrupts) and even the GUI of some apps was still responsive, but other apps just froze. Windows would sometimes even gray them out and show “not responding” dialogs.

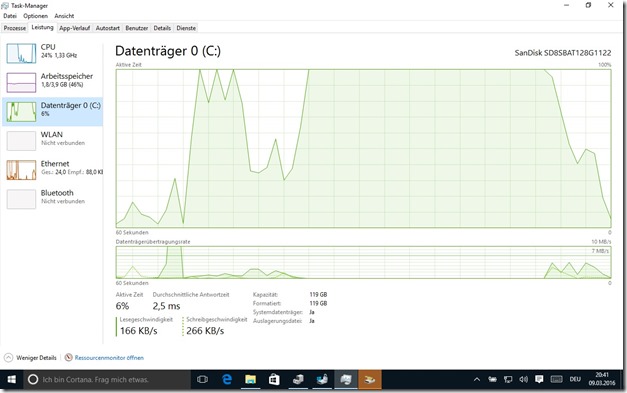

And then I noticed that during this time, the HDD LED was full on. Not the usual flicker when the disk was working but just lighting up steadily. So I fired up the task manager and looked for processes with unusual activity. There were a few random processes that seemed to be “stuck” on I/O, but there was no clear pattern. So I switched over to the “Performance” tab and took a look at the Disk IO graph. And whenever the system behaved “frozen”, the disk activity percentage graph would be stuck at 100% busy while the throughput graph would show zero throughput. After a couple (10 to 40) seconds the activity would drop and the throughput would go up as if nothing had happened.
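The pattern is easy to describe: the disk reports (almost) 100% active time while (almost) no bytes are moving, and this lasts for many seconds. As a rough sketch, here is how one could pick such stalls out of a series of samples. The `DiskSample` type, the `find_stalls` function and all thresholds are my own assumptions for illustration; the code does not read real performance counters, you would have to feed it samples from whatever monitoring tool you use.

```python
from dataclasses import dataclass

@dataclass
class DiskSample:
    busy_percent: float      # disk "active time" in percent
    bytes_per_second: float  # combined read + write throughput

def find_stalls(samples, interval_s=1.0, busy_threshold=95.0,
                idle_bytes=1024.0, min_duration_s=5.0):
    """Return (start_index, duration_seconds) for each busy-but-idle run.

    A sample counts as stalled when the disk is at least `busy_threshold`
    percent busy but moves at most `idle_bytes` bytes per second.
    Runs shorter than `min_duration_s` are ignored.
    """
    stalls = []
    run_start = None
    for i, s in enumerate(samples):
        stalled = (s.busy_percent >= busy_threshold
                   and s.bytes_per_second <= idle_bytes)
        if stalled and run_start is None:
            run_start = i  # a new run begins here
        elif not stalled and run_start is not None:
            duration = (i - run_start) * interval_s
            if duration >= min_duration_s:
                stalls.append((run_start, duration))
            run_start = None
    # Handle a run that is still ongoing at the end of the data
    if run_start is not None:
        duration = (len(samples) - run_start) * interval_s
        if duration >= min_duration_s:
            stalls.append((run_start, duration))
    return stalls
```

With one sample per second, a freeze like the ones described above would show up as a single entry with a duration somewhere between 10 and 40 seconds.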

After watching this for a couple of days (and even seeing one or two bugchecks, AKA blue screens, during disk activity), I decided to involve SanDisk’s support.

After a couple of obvious starter questions (Have you tried using a different SATA connection? No, I only have one in my notebook. Are the BIOS, OS and drive firmware up to date? Yes, I checked in your SanDisk Dashboard!), SanDisk recommended that I format the disk and reinstall everything. So I did what they asked, on the idea that maybe, with the initial Windows 8 install, then the Insider updates and then the final Windows 10 bits, there was something “stuck” in the installed driver versions.

Re-installing Windows 10 amazingly worked without any major trouble: Windows recognized the already-activated Windows 10 license I got by upgrading the machine from Windows 8, and I did not even have to install a single driver by hand, since they all seem to be available in Windows Update now. Then I started checking for the presence of the bug. And yes, it was still there, on my clean-install machine. Here’s a screenshot of how this looks in the task manager: disk 100% busy, no data transfer. In this case for about 45 seconds.

So I started looking at the documentation of the Z400 drive on the SanDisk website. To be precise, it’s a SanDisk SD8SBAT128G1122Z 128G.

Turns out, it’s actually not a consumer drive; it’s mostly meant for embedded OEM systems like point-of-sale terminals (aka cash registers). And then I dug some more and found a standalone firmware updater for the drive called “ssdupdater-z2201000-or-z2320000-to-z2333000”. Wait! Didn’t the SanDisk dashboard just tell me that there was no firmware update? Yet the same dashboard told me that the firmware revision of my drive was z2320000. OK, maybe the SSD Dashboard does not know about these embedded drives and only knows about consumer drive firmware updates. So I downloaded and ran the standalone firmware updater and voilà: the bug disappeared, there were no further bluescreens and the machine feels about 5 times faster than before.
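The updater's name already tells you whether it is meant for your drive, if you read it as "from revision A or B to revision C". That reading is my own guess based on this single file name, not a documented SanDisk naming scheme, but under that assumption the check I did in my head looks like this (the function names are invented for the example):

```python
import re

def parse_updater_name(name):
    """Split '<prefix>-A-or-B-to-C' into ({A, B}, C), all lowercased.

    The naming pattern is an assumption derived from one file name.
    """
    match = re.fullmatch(
        r"[a-z]+-((?:z\d+)(?:-or-z\d+)*)-to-(z\d+)", name.lower()
    )
    if match is None:
        raise ValueError(f"unrecognized updater name: {name!r}")
    sources = set(match.group(1).split("-or-"))
    return sources, match.group(2)

def updater_applies(name, installed_revision):
    """True if the installed revision is one of the updater's source revisions."""
    sources, _target = parse_updater_name(name)
    return installed_revision.lower() in sources

name = "ssdupdater-z2201000-or-z2320000-to-z2333000"
print(updater_applies(name, "z2320000"))  # my drive's revision as reported by the dashboard
```

The comparison ignores upper and lower case, since the revision shows up with both spellings in different places.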

So, my lessons learned for today: Don’t trust support too much, especially if going through consumer/end-user channels. You might have hardware they don’t even know about. And don’t trust their tools. You might get wrong answers.

To be fair, the SanDisk support was really quick to answer for a consumer query that came to them via a web form. The answers were professional and to the point without any useless chitchat, but if the answer isn’t available to them, they simply can’t help. So it would be great if SanDisk could either enhance their SSD Dashboard tool to give correct answers or enhance their support database so that this bug can be found. Because I’m pretty sure it is documented somewhere in the bug list of firmware z2320000 or the release notes of firmware z2333000.

Hope this helps,

H.